What can AI learn from the street?

A short report by Noortje Marres (University of Warwick) on our engagement events and community and partner feedback on the AI in the Street project.

Last month the AHRC/BRAID-funded project AI in the street hosted three public events to present and discuss the project's findings, which I am delighted to say have now been published by our partner Careful Industries.

On 5 September 2024, we hosted a community event in Coventry with participants in AI-in-the-street observatories from Edinburgh, Coventry, Cambridge and London. The programme also included a screening of the documentary film “AI in the street: drone observatory” by Thao Phan and Jeni Lee, which explores a delivery drone trial in Logan (Australia) and the impact of the introduction of these AI-based technologies in “streets in the sky” over Logan on communities on the ground.

Community participants also gave feedback on the project findings. We discussed the designed invisibility (Figure 1) of AI in the street: all observatories found that AI-enabling technologies like cameras, sensors, drones and smart traffic lights in the street are almost always located beyond lines of sight and out of earshot. In the street, AI is not just invisible for technical reasons; it is actively designed to be invisible from the perspective of the street, which poses challenges for public engagement with AI. As one community participant put it: "[AI] feels very hidden away, we don't know where it is." AI in the street lacks a social interface.

Another participant noted that once you know where to look for AI in the street it manifests as an uncertain presence, as it is often impossible to ascertain whether the tech is working or not: "In my street, we have a semi-functional environmental sensor: someone backed into it with their car, so we're not sure if it still works." In some streets, it looks like AI-enabling infrastructure is not consistently maintained, raising critical questions as to whether the technologies in question are installed to serve the community, or are being trialled for audiences located elsewhere.

A round table discussion with project partners considered the policy implications of the project findings. Calum McDonald (Scottish AI Alliance) pointed out that innovation policy has so far paid relatively little attention to evaluating the community benefits of AI:

"Impact assessments are obviously key for environmental protection and securing data rights, but they do not necessarily cover community benefits. More needs to be done to demonstrate how AI is meaningful for people."

Our partner from Transport for West Midlands stressed the importance of public engagement for the development of viable use cases for AI in the street:

"Understandably, engineers are especially enthusiastic about things you can put wheels on, but people vote with their feet. In Coventry, we must ask: who are the users? For this, too, data is valuable: we can use data to make visible user journeys. To do this well, public engagement is required."

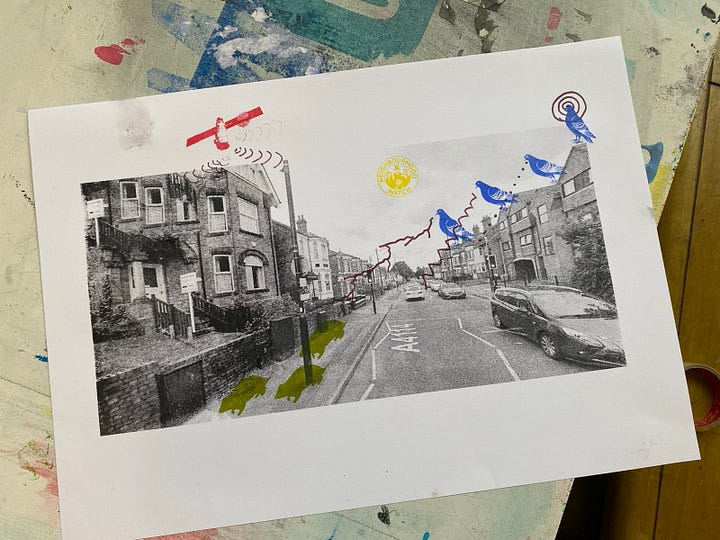

Finally, as the event was hosted in the beautiful art and community space Common Ground, we were lucky to engage in some creative activity alongside our discussions: under the guidance of Kate Rossin and Yasmine Boudiaf, we created screen prints of the city streets in which we have researched everyday manifestations of AI over the past months (Figure 2).

On 12 September 2024, AI in the street co-lead Mercedes Bunz (KCL Digital Humanities) hosted a public debate about AI in the street at the London Science Gallery. Professor Bunz kicked off the evening by asking the audience: "What does AI in the street make you think about?" The two loudest answers were: "public security" and "surveillance."

Computer scientist Theo Damoulas (University of Warwick) responded to this by pointing out that AI is used for a far more diverse range of purposes in the street, from the analysis of geo-economic data to decide the placements of shops, to the monitoring of sewage composition, the installation of broadband cables with capabilities for locative data capture, and the use of remote sensing for navigation and other analytic purposes.

Designer and theatre-maker Janet Vaughan (Talking Birds) asked about the relative lack of public awareness of these often more practical uses of AI, observing that the media portrayal of AI leads many people to think first and foremost of sci-fi and aliens. Partly as a consequence, it seems everyday people are not seeing AI as a practical tool for fixing everyday problems, and this makes it still more difficult for them to make sense of the presence of AI in the street.

Researcher and data ethicist Sam Nutt (London Office for Technology and Innovation) reflected on the gap between the enthusiasm for AI solutions in local government and the difficulty in finding positive and impactful use cases:

"Local authorities want to use AI. But they are finding it hard to realise the impacts that are sometimes promised. Councils need to start with the outcome they want to achieve and not the technology. Doing this well will involve connecting with publics - residents, people in the street - who have sometimes well-founded concerns about negative impacts of technology."

AI & society scholar Maya Indira Ganesh (University of Cambridge) discussed the findings of the Cambridge everyday AI observatory conducted in collaboration with critical disability scholar Louise Hickman (University of Cambridge). Ganesh noted how trials with AI technology in this historic university town clarify the challenge of managing different speeds in AI innovation in the street. As she put it: "In Cambridge, data flows a lot better than we humans do." She also reflected on the unequal impacts of the digital kerb, mentioning how blister pavement originally installed in the street to support the visually impaired is now used by delivery bots to find their way, with likely negative consequences for accessibility (Figure 3).

During our public policy workshop at NESTA, Design Informatics scholar and sociologist Alex Taylor (University of Edinburgh) and Careful Industries' Dominique Barron guided us through the project findings and their implications for public policy. Building on the policy paper written by AI in the street co-lead Rachel Coldicutt (Careful Industries), which outlines the policy recommendations of the project, Barron put the notion of "innovation place" centre stage. She highlighted how the designed invisibility of AI in the street not only leads to missed opportunities for enabling everyday people to contribute to AI-based innovation in the street, but may also unintentionally breed public mistrust (Figure 4).

During the ensuing discussion, workshop participants reflected on the regulatory complexity of the street as an innovation environment: it is often made up of both public and private land, to which very different regulatory requirements apply regarding data protection and urban planning, something that everyday publics are often not aware of.

Miriam Levin (Demos) emphasised the role of structural changes in the relations between publics and local government in the UK and how these impact the democratic legitimacy of innovation. She noted the unexceptionality of AI in this respect: the issues raised by AI innovation in the street mirror those sparked by Low Traffic Neighbourhoods. In the latter case, trust deficits between local government and everyday publics hamper the ability of the former to act as public representatives, with no other representatives of collective interests readily available to take their place in fragmented communities.

We also discussed the gaps between discourse and reality when it comes to the societal benefits of AI innovation. On the one hand, societal benefit is a clear and well-established priority in innovation policy, and place-based innovation has attracted significant interest and support in recent years, with technological innovation increasingly valued as a mechanism for “creating vibrant, liveable communities” by research funders (UKRI, statement on community-led research and innovation, October 2023). On the other hand, our project found that even as AI trials and deployments are increasingly common in city streets across the UK, it often remains difficult for everyday publics to discern the benefits of the presence of AI for their own communities.

What is more, in several cases the uses of AI do not seem particularly innovative from the perspective of the street: AI is often used to enhance existing socio-technical systems, some of which are widely known to have negative consequences for society, such as smart advertising (in Edinburgh) and the car system (in Coventry). Here, public engagement with AI innovation could provide a way of creating incentives for government and industry to match their ambitions in technical innovation with commitments to social innovation.

However, our project also showed that, once prompted, people in the street have little difficulty imagining socially meaningful and beneficial uses of AI in the street, from the use of traffic monitoring to enable the real-time regulation of car traffic along busy commuter roads to the use of delivery drones as part of social services for the elderly.